Abstract

We present analyses and results from an eye-tracking study that investigates the question "What attracts human visual attention in semantically rich images?". Eye gaze is a reliable indicator of visual attention and provides vital cues for inferring the cognitive processes underlying human image understanding. Our study demonstrates that visual attention is specific to interesting objects and actions, contrary to the notion that attention is subjective.

If you use this database, please cite NUSEF as follows:

R. Subramanian¹, H. Katti², N. Sebe¹, M. Kankanhalli², T-S. Chua²: An Eye Fixation Database for Saliency Detection in Images. European Conference on Computer Vision (ECCV 2010), Heraklion, Greece, September 2010.

1 Department of Information Engineering and Computer Science, University of Trento, Italy

2 School of Computing, National University of Singapore, Singapore

bibtex

@inproceedings{NUSEF2010,
  author = {Subramanian Ramanathan and Harish Katti and Nicu Sebe and Mohan Kankanhalli and Tat-Seng Chua},
  title = {An eye fixation database for saliency detection in images},
  booktitle = {ECCV 2010},
  address = {Crete, Greece},
  year = {2010}
}

Description

The NUSEF (NUS Eye Fixation) database was acquired from undergraduate and graduate volunteers aged 18-35 years (μ=24.9, σ=3.4). The ASL™ eye-tracker was used to non-invasively record eye fixations as subjects free-viewed image stimuli. Subject to quality and aspect-ratio constraints, we chose a diverse set of 1024 × 728 resolution images that represent various semantic concepts and capture objects at varying scale, illumination and orientation. The images comprise everyday scenes from Flickr, aesthetic content from Photo.net, Google images and emotion-evoking IAPS [18] pictures.
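Recorded fixations are commonly turned into a smooth density map before being compared against saliency predictions. The following is a minimal sketch of that idea, not the visualisation code shipped with NUSEF. It assumes each fixation is available as a hypothetical `(x, y, duration)` tuple in pixel coordinates of a 1024 × 728 image; the function name `fixation_density_map` and the blur width `sigma` are likewise illustrative choices, and the actual file layout in the archive may differ.

```python
import numpy as np


def fixation_density_map(fixations, width=1024, height=728, sigma=30.0):
    """Return a (height, width) map scaled to [0, 1].

    `fixations` is an iterable of (x, y, duration) tuples. This layout is
    an assumption made for illustration, not the NUSEF file format.
    """
    density = np.zeros((height, width), dtype=np.float64)
    for x, y, duration in fixations:
        col, row = int(round(x)), int(round(y))
        # Ignore fixations that fall outside the image bounds.
        if 0 <= col < width and 0 <= row < height:
            density[row, col] += duration

    # Separable Gaussian blur: one 1-D kernel applied along rows, then columns.
    radius = int(3 * sigma)
    offsets = np.arange(-radius, radius + 1)
    kernel = np.exp(-(offsets ** 2) / (2.0 * sigma ** 2))
    kernel /= kernel.sum()
    density = np.apply_along_axis(np.convolve, 1, density, kernel, mode="same")
    density = np.apply_along_axis(np.convolve, 0, density, kernel, mode="same")

    # Normalise so the most attended location has value 1.
    peak = density.max()
    return density / peak if peak > 0 else density


# Example with made-up fixations (x, y, duration in seconds).
demo = fixation_density_map([(512, 364, 0.30), (200, 100, 0.15)])
```

Weighting by duration is one possible design choice; counting each fixation equally is another, and which one suits a given evaluation depends on the protocol being followed.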

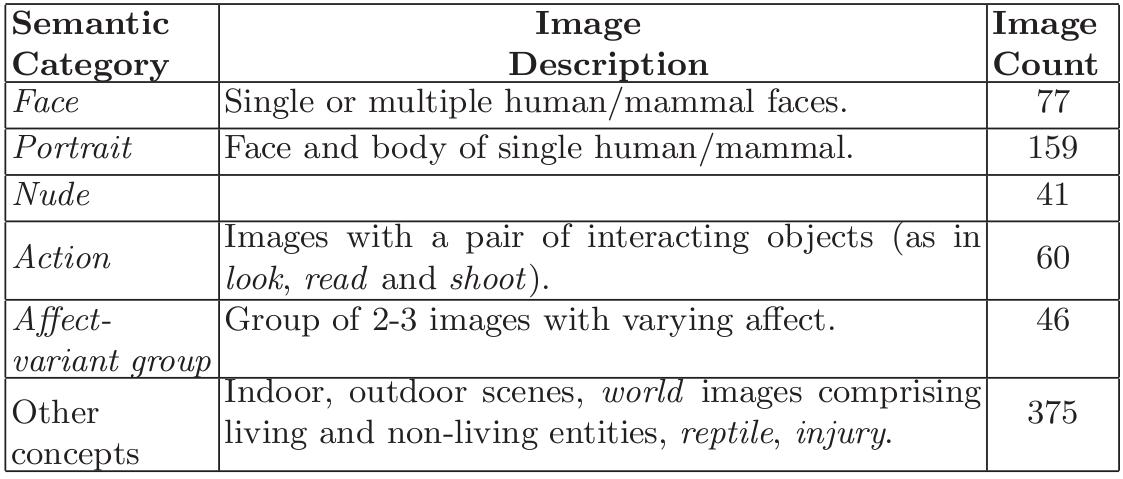

The following table summarises the diverse themes covered in the NUSEF eye-fixation database.

Copyright and Fair Use

NUSEF is available for research purposes only, and we adhere to Fair Use terms and conditions. IAPS images carry their own copyright terms and can be obtained from the NIMH CSEA by filling in their request form.

Downloads

Single archive containing images, eye-fixation data, ground truth segmentation and visualisation code (60.9 MB)

Papers that have used NUSEF

Subramanian Ramanathan, Harish Katti, Raymond Huang, Tat-Seng Chua, Mohan S. Kankanhalli: Automated localization of affective objects and actions in images via caption text-cum-eye gaze analysis. ACM Multimedia 2009: 729-732.

Harish Katti, Ramanathan Subramanian, Mohan Kankanhalli, Tat-Seng Chua, Nicu Sebe, Kalpathi Ramakrishnan: Making computers look the way we look: Exploiting visual attention for image understanding. ACM Multimedia 2010.

Acknowledgements

The authors thank Prof. Why Yong Peng, Department of Psychology, and Prof. Low Kok Lim, School of Computing, NUS, for their support with eye-tracking equipment.